As the semester draws to a close, we are finishing our first prototype. We want to share some of our triumphs and the technical problems we’ve had so far, with Julian covering the coding, Sam the 3D environment, and Jeremy the sound design.

On coding the GSR to connect to Unity and MAX/MSP

For Unity to work with the GSR, a socket had to be established between the two so that they could communicate. We gratefully received help from Mehdi, a computer engineering colleague at the lab, who shared some of his expertise and provided his socket code to complete the task. Once Unity was receiving the GSR’s values, the next task was to devise an algorithm that could interpret, organize, and distribute the incoming data. Our first plan was a dynamic array that would simply take in the values and forward them to the subsystems of our project. The first problem we ran into was storing all the values that were constantly coming in: testing showed that Unity would crash after 10 minutes of receiving and storing data. This was due to the sheer size of the dynamic array, which strained the computer’s memory (note that the GSR sends 256 data points per second). The next phase of the algorithm therefore needed a way to estimate, store, and discard data on the fly while still capturing the data flooding in from the GSR; a buffer had to be created so that data would not be lost. After discussing the details with Professor Shaw, we concluded that averaging the data every 5 seconds would flatten the GSR reading. We agreed that half a second was a good interval, so the algorithm calculates the average of 128 data points (half a second at 256 points per second) and then sends it to the other subsystems.
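The memory problem goes away if the storage never grows. The sketch below is a hypothetical Python illustration (not our Unity C# code) of a fixed-size window: 128 slots are allocated once, each incoming reading fills the next slot, and every time the window is full the mean is handed to a callback and the slots are reused. The class name `HalfSecondAverager` and the callback are illustrative assumptions.

```python
from typing import Callable, List

SAMPLE_RATE_HZ = 256            # GSR output rate stated in the post
WINDOW = SAMPLE_RATE_HZ // 2    # 128 samples = half a second of readings


class HalfSecondAverager:
    """Fixed-size window: storage is allocated once and reused, so memory
    use stays constant no matter how long the session runs."""

    def __init__(self, on_average: Callable[[float], None], window: int = WINDOW):
        self.buffer: List[float] = [0.0] * window  # preallocated, never grows
        self.count = 0
        self.on_average = on_average               # e.g. forwards to subsystems

    def push(self, sample: float) -> None:
        self.buffer[self.count] = sample
        self.count += 1
        if self.count == len(self.buffer):
            self.on_average(sum(self.buffer) / len(self.buffer))
            self.count = 0  # next window overwrites the same slots


# Usage with one second of fake readings (not real GSR data).
averages: List[float] = []
averager = HalfSecondAverager(averages.append)
for i in range(SAMPLE_RATE_HZ):
    averager.push(float(i))
print(averages)  # [63.5, 191.5]
```

Because the list is created at full size up front, a ten-minute session uses exactly the same memory as a ten-second one.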

Once the buffer was built, we added code to measure how long it took to collect 128 data points and calculate their average. It turned out the computer could do these calculations in an extremely short time. The buffer was then changed to use a stack function so that it overwrites itself as soon as 128 data points have filled the array and the calculation has begun. The remaining question was whether the averaging code could finish in less than half a second. We therefore timed the calculation and estimated how much data we would lose without a buffer. It turned out we were losing only around 20% of the data, which still left more than enough to compute an accurate average while keeping the code efficient and fast.
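A timing check of this kind can be sketched in a few lines. This hypothetical Python version uses the standard library’s `time.perf_counter` and `statistics.fmean`; in Unity the analogous tool would be C#’s `System.Diagnostics.Stopwatch`. The placeholder readings are assumptions, not measured GSR values.

```python
import time
from statistics import fmean

WINDOW = 128      # samples per average
BUDGET_S = 0.5    # a new window is due every half second


def timed_average(window):
    """Return the mean of the window and how long computing it took (seconds)."""
    start = time.perf_counter()
    mean = fmean(window)
    elapsed = time.perf_counter() - start
    return mean, elapsed


samples = [0.5] * WINDOW  # placeholder readings, not real GSR data
mean, elapsed = timed_average(samples)
print(f"mean={mean}, took {elapsed * 1e3:.4f} ms, within budget: {elapsed < BUDGET_S}")
```

Comparing `elapsed` against the half-second budget answers the question above directly: if the calculation is orders of magnitude faster than 0.5 s, averaging can run inline without a second buffer.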

With an efficient algorithm that could sum 128 data points and return their average without buffering and storing every GSR reading, we were ready to distribute the average to the subsystems. This is where our next problem arose, due to working across multiple platforms. The GSR’s data was sent to Unity through a server, with Unity acting as the client socket. This meant we could send the GSR average to the Unity subsystems of our project, but the generative sound lives in MAX/MSP. We would therefore have to create either a multi-socket server or a server embedded within the socket. The multi-socket server was the most time-efficient way to distribute data across platforms, but it required multithreaded programming that was too complicated for our current skill level. We instead built a server embedded within the socket (Unity), which takes the average of the GSR data and sends it to MAX/MSP. The drawback of this method is latency between the moment Unity applies the new average and the moment MAX/MSP applies it, adding a half-second delay on top of the generative sound. We must deal with this at this early stage, because human hearing can detect sound anomalies at around 10 milliseconds. One quick fix we have applied is to create all ambient and environmental sound in Unity. The main issue remains that, from the user’s first reading, half a second is lost to calculating the average and another half second to re-sending the new average to MAX/MSP, so the generative sound runs a full second behind the user’s original reading. User testing will show whether users notice a significant inconsistency in the generative sound.
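The one-second figure follows from simple arithmetic, sketched here in Python. The 0.5 s relay delay and the 10 ms threshold are the figures given in this post, not independent measurements; the function name is hypothetical.

```python
SAMPLE_RATE_HZ = 256           # GSR rate from the post
WINDOW = 128                   # samples per average (half a second)
RELAY_DELAY_S = 0.5            # re-send delay to MAX/MSP, as reported in the post
AUDIBLE_THRESHOLD_S = 0.010    # ~10 ms threshold cited in the post


def generative_sound_lag(window: int = WINDOW, rate_hz: int = SAMPLE_RATE_HZ,
                         relay_s: float = RELAY_DELAY_S) -> float:
    """Seconds between a reading and the generative sound reacting to it."""
    averaging_s = window / rate_hz   # time needed to collect one window
    return averaging_s + relay_s


lag = generative_sound_lag()
print(f"lag = {lag:.2f} s, about {lag / AUDIBLE_THRESHOLD_S:.0f}x the 10 ms threshold")
```

Shrinking the window or removing the relay step are the only two levers in this model; halving the window to 64 samples, for instance, would cut the averaging share to 0.25 s.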

On the 3D environment design and working with Unity

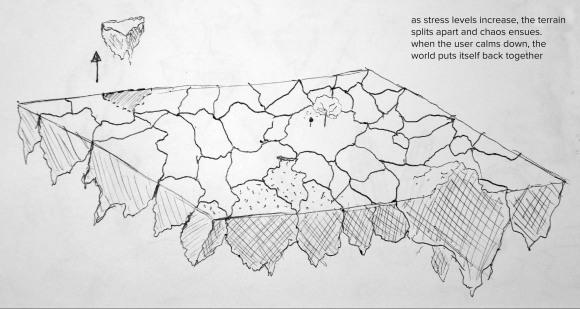

This term we’ve been bringing 3D models from Maya into Unity and writing C# code that interprets the numeric data coming in from the GSR and changes the environment accordingly. For the technical demo, a simple ground was created in Maya and cut into about 12 pieces that can move independently. After importing these into Unity, we wrote a script that animates an object floating away from the centre and back, and attached it to each chunk. A 1-to-10 scale represents the ‘chaos level’ of the environment, and different chunks are set to animate at different chaos levels. After integrating the code that brings the GSR data into Unity, we wrote a script that converts GSR numbers to chaos levels using minimum and maximum values; these can be calibrated manually for different individuals. As the user wearing the GSR becomes more excited or stressed, the environment becomes more chaotic and more pieces float away; as they calm down, the pieces return. We also added the ability to switch the program to fake GSR readings entered through a slider in Unity, for testing when no GSR readings are present. In the demo video below, you can see how the chunks react as I change the chaos level by moving the test input slider.
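The mapping described above can be sketched as follows, in Python rather than our actual C# scripts. It assumes a linear mapping that splits the calibrated minimum-to-maximum range into ten equal bands and clamps outliers; the chunk activation levels in `CHUNK_LEVELS` are hypothetical values chosen for illustration.

```python
from typing import List, Optional


def gsr_to_chaos(gsr: float, gsr_min: float, gsr_max: float) -> int:
    """Map a GSR average onto the 1-10 chaos scale by splitting the calibrated
    [gsr_min, gsr_max] range into ten equal bands; readings outside it are clamped."""
    if gsr_max <= gsr_min:
        raise ValueError("gsr_max must be greater than gsr_min")
    t = (gsr - gsr_min) / (gsr_max - gsr_min)
    t = min(max(t, 0.0), 1.0)
    return 1 + min(int(t * 10), 9)


def current_chaos(gsr: Optional[float], slider: int, gsr_min: float, gsr_max: float) -> int:
    """Use the test slider value when no GSR reading is present."""
    return slider if gsr is None else gsr_to_chaos(gsr, gsr_min, gsr_max)


# Hypothetical activation levels for 12 chunks, spread from 2 to 10 so that
# nothing floats at the calmest level and everything floats at the highest.
CHUNK_LEVELS: List[int] = [2 + (i * 8) // 11 for i in range(12)]


def floating_chunks(chaos: int) -> List[int]:
    """Indices of chunks that should drift away at this chaos level."""
    return [i for i, level in enumerate(CHUNK_LEVELS) if chaos >= level]
```

For example, with a calibrated range of 0 to 10, a reading of 5 lands in chaos level 6, and any chunk whose activation level is 6 or lower floats away.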

One of the most challenging aspects was bringing the GSR data into Unity. At first we were receiving the data much more slowly than we had hoped: we wanted to calculate a new average every half second but were only getting one after several seconds, too slow for a smooth interpretation of the user’s state. We solved the problem when I realized that we had coded it to read at the speed of the GSR (256 data points per second), but the code in Unity only executes once per frame, at 30 frames per second. Once we adjusted the code to read 30 data points per second, it ran just as we expected, and by averaging all the points in each half second we were able to generate a smooth, accurate representation of the user’s state.
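One plausible reading of the slowdown: a window sized for 128 samples, filled at only one sample per frame, takes 128 / 30 ≈ 4.3 s to fill instead of 0.5 s. The Python sketch below models the fix under that assumption; `update()` stands in for Unity’s per-frame `Update()` call, and the class name is hypothetical.

```python
from typing import List, Optional

FPS = 30                          # Unity frame rate mentioned in the post
FRAMES_PER_AVERAGE = FPS // 2     # 15 frames = half a second

# Time to fill a 128-sample window at one sample per frame (~4.3 s).
SECONDS_TO_FILL_128 = 128 / FPS


class PerFrameGsrReader:
    """Takes one GSR value per frame (what an Update() call would do) and
    publishes the mean of each half-second group of frames."""

    def __init__(self) -> None:
        self.window: List[float] = []
        self.latest_average: Optional[float] = None

    def update(self, gsr_value: float) -> None:
        self.window.append(gsr_value)
        if len(self.window) == FRAMES_PER_AVERAGE:
            self.latest_average = sum(self.window) / FRAMES_PER_AVERAGE
            self.window.clear()


reader = PerFrameGsrReader()
for frame in range(FPS):          # one second of frames with fake readings
    reader.update(float(frame))
print(reader.latest_average)      # mean of frames 15-29
```

Sizing the window in frames rather than in sensor samples keeps the half-second cadence tied to the rate at which the code actually runs.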

The next steps in this process are to create a larger environment with more chunks and to begin adding visual details such as trees and grass. We will also work on a script that monitors the user’s GSR levels and automatically adjusts the minimum and maximum values to suit them.
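One possible shape for that automatic calibration, sketched in Python under our own assumptions: keep the most recent half-second averages in a bounded queue and use their minimum and maximum as the mapping range. The 120-average history (one minute at two averages per second) and the class name are hypothetical choices, not settled design.

```python
from collections import deque
from typing import Deque, Optional, Tuple


class AutoCalibrator:
    """Tracks the min and max of the most recent GSR averages so the chaos
    mapping can adapt to each user instead of being set by hand."""

    def __init__(self, history: int = 120) -> None:  # 120 averages = 60 s (assumed)
        self.recent: Deque[float] = deque(maxlen=history)

    def observe(self, gsr_average: float) -> None:
        self.recent.append(gsr_average)  # oldest value drops out when full

    def bounds(self) -> Optional[Tuple[float, float]]:
        """Current (min, max), or None if there is no usable range yet."""
        if len(self.recent) < 2:
            return None
        lo, hi = min(self.recent), max(self.recent)
        return (lo, hi) if hi > lo else None
```

Returning `None` when the readings are flat lets the caller keep its previous or manual calibration rather than dividing by a zero-width range.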

On the Generative and Environmental Sound

So far this semester, we have focused on getting the code and visuals to function as a single whole, while letting sound exist as a side element of the project. Sound will play a bigger part later, and we have discovered that the sound design will be very important to the user’s immersion in the final prototype. This is because sound can add value to the visuals, create mood and atmosphere, and tell the user, sonically, how their GSR data is being read. Right now we are using two methods to produce sound: Unity for environmental sound and MAX/MSP for generative sound. This dual method presents problems: as noted above, a multi-socket connection would be needed for MAX/MSP to take in the values. We are working to remedy this by sending a UDP signal from Unity to MAX, thus avoiding the multi-socket problem.
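Max’s `[udpreceive]` object decodes OpenSoundControl (OSC) packets, so a UDP message is easiest to receive if it is OSC-encoded. The Python sketch below builds a minimal OSC message carrying one float and sends it over UDP; in Unity the equivalent would use .NET’s `System.Net.Sockets.UdpClient`. The address `/gsr` and port 7400 are hypothetical and would need to match the Max patch (e.g. `[udpreceive 7400]` followed by `[route /gsr]`).

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """OSC strings are null-terminated, then padded to a multiple of 4 bytes."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_float_message(address: str, value: float) -> bytes:
    """Address pattern, type-tag string ',f', then a big-endian float32."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",f") + struct.pack(">f", value)


def send_gsr_average(average: float, host: str = "127.0.0.1", port: int = 7400) -> None:
    """Fire-and-forget: UDP needs no connection, so no second server is required."""
    msg = osc_float_message("/gsr", average)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(msg, (host, port))
```

Because UDP is connectionless, Unity only needs to send datagrams; it never has to accept a connection from Max, which is what sidesteps the multi-socket server.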

We are currently focusing on three methods of generative sound: harmonic feedback, binaural beats, and soundscape playback. Harmonic feedback is our term for sound that responds to the user’s stress level and sits in the foreground of the listening experience. When the user’s stress is low, notes of major chords are played back to match their stress level; when stress is high, minor chords are played in a quicker progression. This relates to mood, since major chords tend to sound happier and minor chords sadder. The audio signal also becomes distorted under high stress and softer under low stress.
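The harmonic feedback rules can be sketched as a single mapping from a 1-10 stress level to chord notes, chord duration, and a distortion amount. This is a hypothetical Python illustration, not our MAX patch: the switch to minor at level 6, the 4 s to 1 s progression range, and middle C (MIDI 60) as the root are all assumed placeholders.

```python
from dataclasses import dataclass
from typing import List

MAJOR = (0, 4, 7)   # root, major third, perfect fifth (semitones above root)
MINOR = (0, 3, 7)   # root, minor third, perfect fifth


@dataclass
class HarmonicFrame:
    midi_notes: List[int]
    seconds_per_chord: float
    distortion: float   # 0 = clean and soft, 1 = heavily distorted


def harmonic_feedback(stress: int, root: int = 60, high_stress_from: int = 6) -> HarmonicFrame:
    """Map a 1-10 stress level to a chord, progression speed and distortion.
    All thresholds and ranges here are hypothetical placeholders."""
    stress = min(max(stress, 1), 10)
    intervals = MINOR if stress >= high_stress_from else MAJOR
    seconds_per_chord = 4.0 - 3.0 * (stress - 1) / 9   # 4 s when calm, 1 s at peak
    distortion = (stress - 1) / 9
    return HarmonicFrame([root + i for i in intervals], seconds_per_chord, distortion)
```

The only difference between the two chord qualities is the third (4 semitones for major, 3 for minor), which is why a single interval table per quality is enough.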

Next, we have binaural beats running through different wave types throughout the experience. Binaural beats are produced by playing a sine wave of a slightly different frequency into each ear through stereo headphones; the listener perceives a beat at the difference between the two frequencies. They are used with the aim of stimulating different brain states, allowing a person to go deeper into their subconscious and thus deeper into immersion. We are hoping to use this to our advantage to induce these “deeper” states in our users, and also to reduce anxiety and stimulate theta states. One problem we might run into is users rejecting these sounds, but we hope that playing them underneath the rest of the soundscape will make them more acceptable.
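A binaural beat is just two sine tones, one per channel. The Python sketch below generates stereo samples where the right channel is `beat_hz` above the left; the 200 Hz carrier, 6 Hz beat (inside the commonly cited 4-8 Hz theta range), and 0.2 gain for sitting under the soundscape are hypothetical settings, not our final values.

```python
import math
from typing import List, Tuple


def binaural_beat(carrier_hz: float, beat_hz: float, seconds: float,
                  sample_rate: int = 44100, gain: float = 0.2) -> List[Tuple[float, float]]:
    """Stereo (left, right) samples: the left ear gets carrier_hz and the right
    ear carrier_hz + beat_hz, so the listener perceives a beat at beat_hz.
    A low default gain keeps the tones under the rest of the soundscape."""
    left_hz, right_hz = carrier_hz, carrier_hz + beat_hz
    n = int(seconds * sample_rate)
    return [
        (gain * math.sin(2 * math.pi * left_hz * i / sample_rate),
         gain * math.sin(2 * math.pi * right_hz * i / sample_rate))
        for i in range(n)
    ]


# Hypothetical settings: a 6 Hz beat on a 200 Hz carrier, one second long.
samples = binaural_beat(200.0, 6.0, 1.0)
```

The effect depends on each ear receiving only its own tone, so it needs headphones; mixing the two channels to mono would produce an ordinary acoustic beat instead.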

Finally, we play back soundscapes made up of different nature sounds. These act as ambience for the listener and play depending on the user’s stress level. Using a pitch- and time-stretching program we have built in MAX, these nature sounds can be pitched up and down to form a harmonic relationship with the other sound elements and, we hope, add a sense of tranquility and unity to the overall auditory experience. A current technical problem with the soundscapes is how to trigger the sounds without being too jarring for the user, and how to transition and crossfade between them for immediate feedback. We hope that user testing will make our course of action clearer.
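Two small formulas are relevant here, sketched in Python as one option rather than a decided approach. An equal-power crossfade (a standard technique, named here as our suggestion) uses cosine and sine gain curves so the combined loudness does not dip mid-transition, and an equal-tempered pitch shift of n semitones corresponds to a frequency ratio of 2^(n/12).

```python
import math
from typing import Tuple


def equal_power_crossfade(progress: float) -> Tuple[float, float]:
    """Gains (outgoing, incoming) for progress 0..1. Because cos^2 + sin^2 = 1,
    the combined power stays constant, avoiding the dip of a linear fade."""
    p = min(max(progress, 0.0), 1.0)
    return math.cos(p * math.pi / 2), math.sin(p * math.pi / 2)


def pitch_ratio(semitones: float) -> float:
    """Equal-tempered frequency ratio for a shift of the given semitones."""
    return 2.0 ** (semitones / 12.0)
```

With time-stretching handled separately, `pitch_ratio(7)` (about 1.498) would move a nature sound up a perfect fifth so it sits consonantly against a chord on the original root.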

As for environmental sound in Unity, core sounds are being connected to the environment, for example rocks shifting, wind, rumbling, and meteors flying past. These “sync sounds” will make the objects feel less virtual and more real.